ISG Talks are sponsored by Couchbase.

Sushant Jain: Large scale and low latency data distribution from database to servers

DBH 6011

Many applications at Google are structured with data stored in a transactional database (the source of truth), while the same data is required by servers distributed worldwide. For efficient and fast computation, these servers keep the data in memory. Further, the database changes continuously, and the in-memory view on this large number of servers must be updated in real time. For example, in the Google Search Ads application, advertiser configuration is stored in a database, and this data is loaded into the memory of various servers to compute ads in a scalable and fast way. In this talk, we describe our solution to this data distribution problem and the challenges that we encountered in providing a highly reliable and low latency service.

Dr. Andrey Balmin and Mayank Pradhan (Workday): Workday Prism Analytics: Unifying Interactive and Batch Data Processing Using Apache Spark

DBH 3011

Abstract: Workday Prism Analytics enables data discovery and interactive Business Intelligence analysis for Workday customers. To prepare data for analysis, business users can set up data transformation pipelines in an interactive, self-service, modern data prep environment. Thus, Workday Prism Analytics needs to run three types of scalable data processing applications: "always on" query engine and data […]

Vinayak Borkar (FireEye Inc.): The X15 Machine Data Management Platform

DBH 4011

Abstract: Machine Data (aka Log Data) is continuously produced by applications and devices as a result of human-computer and computer-computer interactions. Although most of this data was initially generated for ad-hoc human consumption to aid with debugging and troubleshooting systems and deployments, its systematic treatment using well-known data processing techniques can unlock valuable insight about operations […]

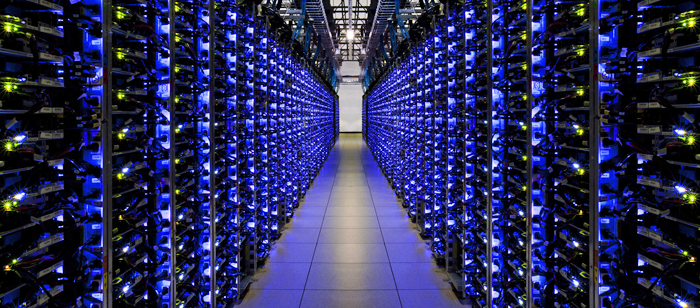

David Lomet (Microsoft Research): How Data Caching Systems Succeed

DBH 4011

Data in traditional "caching" data systems resides on secondary storage, and is read into main memory only when operated on. This limits system performance. Main memory data stores with data always in main memory are much faster. But this performance comes at a cost. In this paper, we analyze the costs of both in-memory operations and secondary storage operations where data is not "in cache". We study the performance impact of cache misses on caching system performance. The analysis considers both execution and storage costs. Based on our analysis, we derive cost/performance results for a data caching system and a main memory system to understand where each demonstrates the best cost per operation, what is driving the cost differences, and the scale of the differences. This analysis (1) provides insight into why data caching systems continue to dominate the market; (2) points to higher performance that does not rely on simply increasing main memory cache size; and (3) suggests a path to lower costs and hence better cost/performance.